Training Aware Sigmoidal Optimization

Photo by Authors

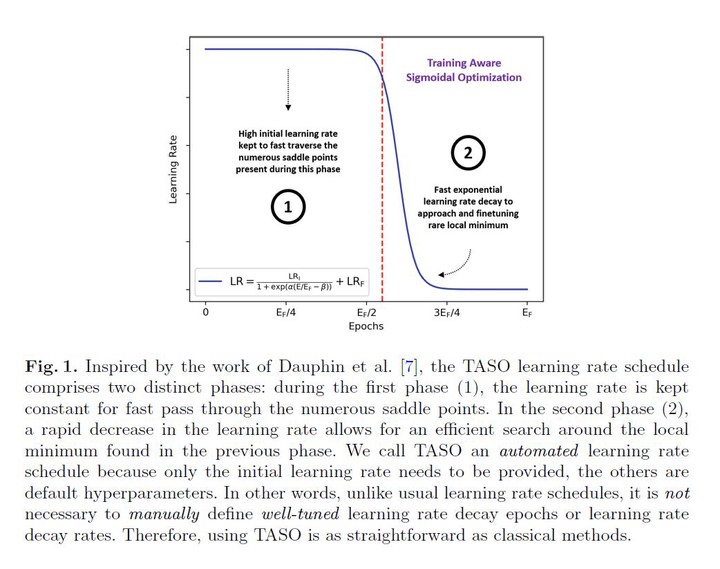

Proper optimization of deep neural networks is an open research question, since an optimal procedure for changing the learning rate throughout training is still unknown. Manually defining a learning rate schedule involves troublesome, time-consuming trial-and-error procedures to determine hyperparameters such as learning rate decay epochs and learning rate decay rates. Although adaptive learning rate optimizers automate this process, recent studies suggest they may cause overfitting and reduce performance compared to fine-tuned learning rate schedules. Considering that the loss functions of deep neural networks present landscapes with many more saddle points than local minima, we propose the Training Aware Sigmoidal Optimizer (TASO), which consists of a two-phase automated learning rate schedule. The first phase uses a high learning rate to quickly traverse the numerous saddle points, while the second phase uses a low learning rate to slowly approach the center of the previously found local minimum. We compared the proposed approach with commonly used adaptive learning rate methods such as Adam, RMSProp, and Adagrad. Our experiments showed that TASO outperformed all competing methods in both optimal (i.e., performing hyperparameter validation) and suboptimal (i.e., using default hyperparameters) scenarios.
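To make the two-phase idea concrete, here is a minimal sketch of a sigmoid-shaped learning rate schedule in Python. It is an illustration under stated assumptions rather than the exact TASO formula: the function name `sigmoidal_lr`, the parameters `lr_high`, `lr_low`, `alpha` (steepness of the transition), and `beta` (fraction of training at which the transition is centered), and all default values are hypothetical.

```python
import math


def sigmoidal_lr(epoch, total_epochs, lr_high=0.05, lr_low=0.0005,
                 alpha=10.0, beta=0.5):
    """Two-phase learning rate that decays along a reversed sigmoid.

    All names and defaults here are illustrative assumptions,
    not values taken from the article.
    """
    # Fraction of training completed, in [0, 1].
    progress = epoch / total_epochs
    # Reversed sigmoid: close to 1 early in training, close to 0 late.
    gate = 1.0 / (1.0 + math.exp(alpha * (progress - beta)))
    # Blend between the high (phase one) and low (phase two) rates.
    return lr_low + (lr_high - lr_low) * gate


if __name__ == "__main__":
    # Print the schedule at a few points of a 100-epoch run.
    for epoch in (0, 25, 50, 75, 100):
        print(epoch, sigmoidal_lr(epoch, total_epochs=100))
```

With these assumed defaults, the rate stays near `lr_high` during the first part of training, drops smoothly around the midpoint, and settles near `lr_low` for the remainder. A larger `alpha` makes the transition sharper, and `beta` shifts where it happens.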