Entropic Out-of-Distribution Detection

Photo by Authors

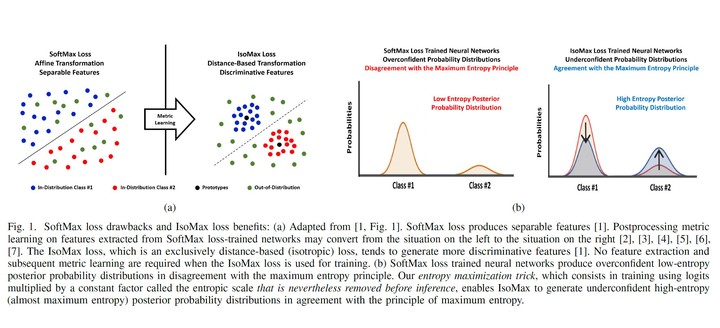

Out-of-distribution (OOD) detection approaches usually impose special requirements (e.g., hyperparameter validation, collection of outlier data) and produce side effects (e.g., a drop in classification accuracy, slower and energy-inefficient inference). We argue that these issues are a consequence of the anisotropy of the SoftMax loss and its disagreement with the maximum entropy principle. We therefore propose the IsoMax loss and the entropic score. Replacing the SoftMax loss with the IsoMax loss is a seamless drop-in change that requires neither additional data collection nor hyperparameter validation. The trained models exhibit no drop in classification accuracy and produce fast, energy-efficient inferences. Moreover, our experiments show that training neural networks with the IsoMax loss significantly improves their OOD detection performance. The IsoMax loss exhibits state-of-the-art performance under the conditions above (fast energy-efficient inference, no classification accuracy drop, no collection of outlier data, and no hyperparameter validation), a setting we call the seamless OOD detection task. In future work, current OOD detection methods may replace the SoftMax loss with the IsoMax loss to improve their performance on the commonly studied non-seamless OOD detection problem.
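The text above names the IsoMax loss and the entropic score without giving their formulas, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes that the "isotropic" logits are negative Euclidean distances between a feature vector and per-class prototypes, that the loss is a cross-entropy over those logits multiplied by a fixed scale (the value 10.0 here is an assumption), and that the entropic score is the negative entropy of the output probabilities. All function names are hypothetical.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def isotropic_logits(features, prototypes, scale=1.0):
    # Assumption: logits depend only on the distance to each class
    # prototype, so they are the same in every direction (isotropic).
    return [-scale * math.dist(features, p) for p in prototypes]

def isomax_style_loss(features, prototypes, target, scale=10.0):
    # Assumption: cross-entropy over scaled distance-based logits.
    # The fixed scale (10.0) is illustrative, not taken from the text.
    probs = softmax(isotropic_logits(features, prototypes, scale))
    return -math.log(probs[target])

def entropic_score(probs):
    # Assumption: negative entropy of the output distribution.
    # Higher (closer to 0) means more confident, i.e. more in-distribution.
    return sum(p * math.log(p) for p in probs if p > 0.0)

# Three hypothetical class prototypes in a 2-D feature space.
prototypes = [[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]]
near = [0.1, 0.0]   # close to the first prototype
far = [2.0, 2.0]    # equidistant from all three prototypes

score_near = entropic_score(softmax(isotropic_logits(near, prototypes)))
score_far = entropic_score(softmax(isotropic_logits(far, prototypes)))
print(score_near > score_far)
```

In this sketch the score is computed from a single forward pass with no extra data or tuned threshold on the model side, which is the property the seamless setting asks for; a deployment would still need to choose a decision threshold on the score.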