Improving Universal Language Model Fine-Tuning using Attention Mechanism

Photo by Authors

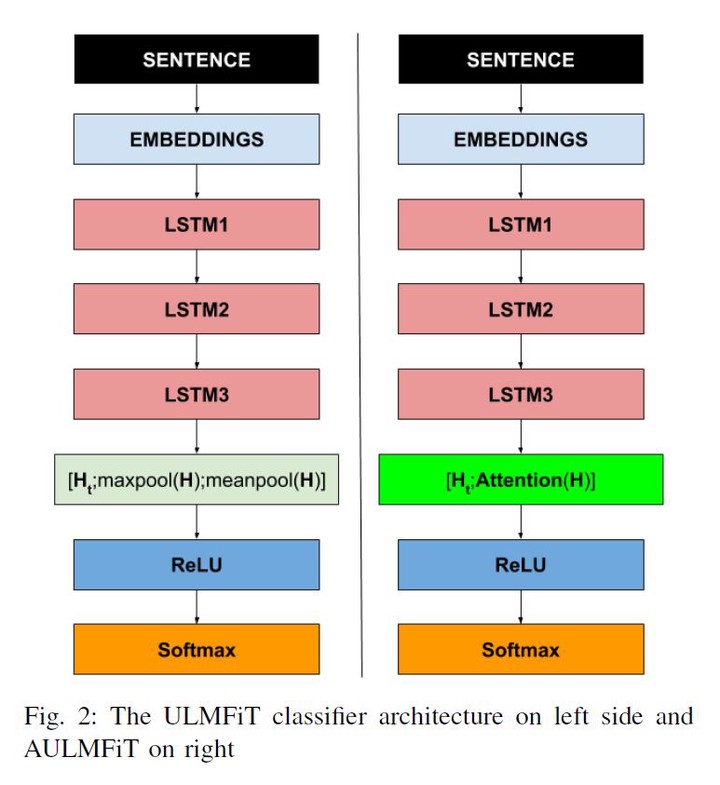

Inductive transfer learning is widespread in computer vision applications. In natural language processing (NLP), however, it is still an under-explored area. The most common transfer learning method in NLP is the use of pre-trained word embeddings. Universal Language Model Fine-Tuning (ULMFiT) is a recent approach that trains a language model and transfers its knowledge to a final classifier. During the classification step, ULMFiT uses max and average pooling layers to select the useful information from an embedding sequence. We propose replacing the max and average pooling layers with a soft attention mechanism. The goal is to learn which information in the embedding sequence is most important rather than assuming that it lies in the max and average values. We evaluate the proposed approach on six datasets and achieve the best performance on all of them compared with approaches from the literature.
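To make the contrast between the two pooling strategies concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the implementation evaluated here: the function names `max_avg_pool` and `soft_attention_pool` are hypothetical, and the scoring function (a dot product between each embedding and a weight vector `w` that would be learned during training) is one common choice of soft attention that we assume for the example.

```python
import math

def max_avg_pool(hidden_states):
    """Fixed pooling: concatenate the element-wise max and mean over time steps."""
    dims = range(len(hidden_states[0]))
    max_pool = [max(h[d] for h in hidden_states) for d in dims]
    avg_pool = [sum(h[d] for h in hidden_states) / len(hidden_states) for d in dims]
    return max_pool + avg_pool

def soft_attention_pool(hidden_states, w):
    """Soft attention: score each time step, softmax the scores, return the weighted sum.

    ASSUMPTION: the score is a dot product with a vector `w`; in a real model
    `w` would be a trainable parameter rather than a hand-picked list.
    """
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in hidden_states]
    peak = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]  # attention weights, one per time step
    dims = range(len(hidden_states[0]))
    context = [sum(a * h[d] for a, h in zip(weights, hidden_states)) for d in dims]
    return context, weights

# Toy sequence of three 2-dimensional embeddings (made-up values).
H = [[1.0, 0.0], [0.0, 2.0], [3.0, 1.0]]
pooled = max_avg_pool(H)                                # fixed summary of the sequence
context, weights = soft_attention_pool(H, [0.5, -0.5])  # summary weighted by learned relevance
uniform_context, uniform_weights = soft_attention_pool(H, [0.0, 0.0])
```

With an all-zero `w`, every time step receives the same weight and the attention output reduces to plain average pooling. Training `w` lets the classifier move away from that uniform weighting toward the time steps that matter for the task.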