Reducing SqueezeNet Storage Size with Depthwise Separable Convolutions

Photo by Authors

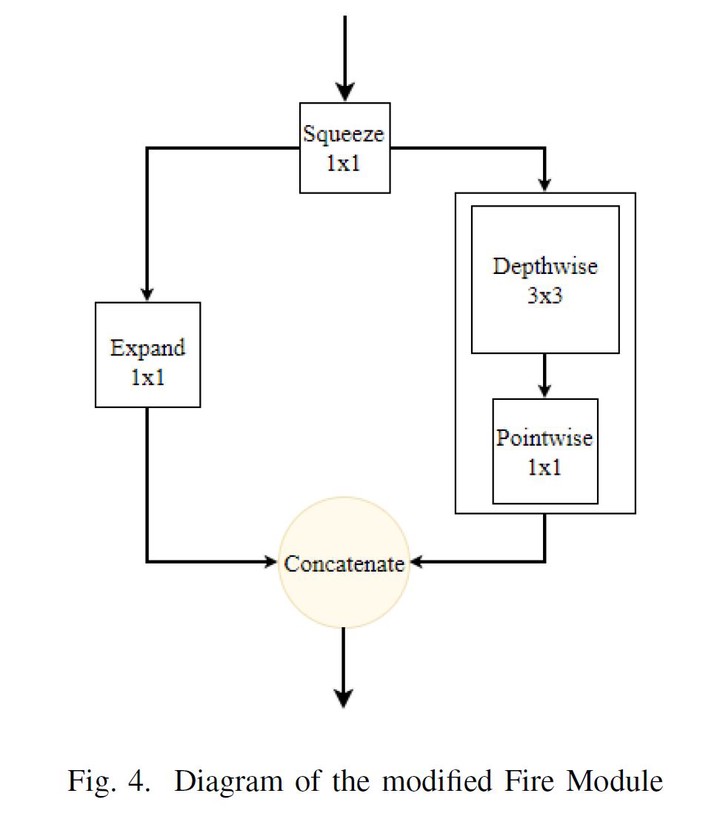

Current research on convolutional neural networks usually focuses on improving network accuracy, with little regard for network size or inference time. In this paper, we investigate how reducing the storage space of SqueezeNet affects inference time when processing single test samples. To reduce the storage space, we propose modifying SqueezeNet’s Fire Modules to include Depthwise Separable Convolutions (DSC). The resulting network, referred to as SqueezeNet-DSC, is compared with other convolutional neural networks: MobileNet, AlexNet, VGG19, and the original SqueezeNet. In analyzing the models, we consider accuracy, number of parameters, parameter storage size, and the processing time of a single test sample on the CIFAR-10 and CIFAR-100 datasets. SqueezeNet-DSC exhibited a considerable size reduction (37% of the size of SqueezeNet), at the cost of an accuracy loss of 1.07% on CIFAR-10 and 3.06% in top-1 accuracy on CIFAR-100.
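To see why depthwise separable convolutions shrink a model, it can help to count parameters for a single layer. The sketch below is only an illustration and not the exact SqueezeNet-DSC layout: the helper names `conv_params` and `dsc_params` are hypothetical, and the layer shape (16 input channels, 64 output channels, 3x3 kernel) is an assumed example in the style of a Fire Module's 3x3 expand branch. It also assumes a bias term on every convolution and 32-bit floats for storage.

```python
def conv_params(c_in, c_out, k, bias=True):
    """Parameters of a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def dsc_params(c_in, c_out, k, bias=True):
    """Parameters of a depthwise separable convolution:
    a k x k depthwise convolution (one filter per input channel)
    followed by a 1 x 1 pointwise convolution that mixes channels."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


# Assumed example shape, chosen for illustration only.
c_in, c_out, k = 16, 64, 3

standard = conv_params(c_in, c_out, k)
separable = dsc_params(c_in, c_out, k)

BYTES_PER_FLOAT32 = 4  # assumption: parameters stored as 32-bit floats

print("standard conv params:", standard)
print("separable conv params:", separable)
print("standard conv bytes:", standard * BYTES_PER_FLOAT32)
print("separable conv bytes:", separable * BYTES_PER_FLOAT32)
print("ratio: {:.3f}".format(separable / standard))
```

The per-layer saving is much larger than the whole-network figure reported above, since only some layers of a network are 3x3 convolutions and the 1x1 layers gain nothing from the factorization.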